Imagine an automated rating of CVs that decides who is granted a scholarship or which job candidate is hired. This is not science fiction. Big private companies increasingly rely on such mechanisms to make hiring decisions. They analyse the data of their employees to find the underlying patterns of success. The characteristics of job candidates are then matched with those of successful employees, and the system recommends the candidates with the most similar characteristics. Much less time and effort is needed to choose the “right” candidates from a pool of promising applicants. Certainly, the human resources department has to reflect on which characteristics to choose and how to combine and weight them, but the recommendations based on the analysis of big data seem to be very efficient.
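To make the matching step concrete, here is a minimal sketch of how such a recommendation could work. It is an illustration only, not the method of any particular company: the feature names, the 0 to 1 scaling, the averaged "success profile" and the choice of cosine similarity are all assumptions made for this example.

```python
from math import sqrt

def centroid(rows):
    """Average each feature over a list of equally long feature vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]

def cosine(a, b):
    """Cosine similarity of two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def rank_candidates(successful, candidates):
    """Sort candidates by similarity to the average successful employee."""
    profile = centroid(successful)
    scored = [(name, cosine(vec, profile)) for name, vec in candidates.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)

# Hypothetical features, each scaled to 0..1:
# [years_experience, tenure_score, test_score]
successful_employees = [[0.8, 0.9, 0.7], [0.7, 0.8, 0.9]]
candidates = {
    "conventional": [0.75, 0.85, 0.8],
    "unconventional": [0.1, 0.2, 0.95],
}

ranking = rank_candidates(successful_employees, candidates)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

In this toy setup the candidate who resembles past employees is ranked first, while the candidate with the highest test score but an unusual profile falls behind. Which characteristics enter the vector, and how they are weighted, remains a human decision.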

Making automated, algorithm-produced predictions about an individual person by analysing information from many people is nevertheless problematic in several ways. First, it requires, among other things, large datasets to avoid bias. Second, the demand for standardized CVs implies that non-normative ways of presenting oneself are excluded a priori. Third, assume that the characteristics of high achievers change over time. The system will continue (at least for some time) to formulate recommendations based on past experience. A static prediction model will be unable to detect potentially innovative candidates who have divergent experiences and skills. It thus discriminates against individuals with non-standard backgrounds and motivations. A final problem is that all the data available to the model come from people who were accepted in the first place and who have proven successful or unsuccessful thereafter. Nothing is known about the career paths of the applicants who were rejected.
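The final problem, that outcomes are only ever observed for people who were accepted, can be illustrated with a small sketch. The records below are invented for the example, and the `would_succeed` column is purely hypothetical: in reality it is exactly the information that is missing for rejected applicants.

```python
# Invented records: (background, was_hired, would_succeed).
# would_succeed is unobservable in practice for anyone who was not hired.
applicants = [
    ("standard", True, True),
    ("standard", True, False),
    ("standard", True, True),
    ("non_standard", False, True),
    ("non_standard", False, True),
    ("non_standard", False, False),
]

def observed_success_rate(records, background):
    """Success rate as the model sees it: hired applicants only."""
    outcomes = [succ for bg, hired, succ in records if bg == background and hired]
    if not outcomes:
        return None  # no hired examples, so the model has nothing to learn from
    return sum(outcomes) / len(outcomes)

def true_success_rate(records, background):
    """Success rate over all applicants; not computable from real data."""
    outcomes = [succ for bg, _, succ in records if bg == background]
    return sum(outcomes) / len(outcomes)

for group in ("standard", "non_standard"):
    print(group, observed_success_rate(applicants, group), true_success_rate(applicants, group))
```

In this constructed case both groups would succeed equally often, yet the data available to the model contain no evidence at all about the group that was never hired.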

“In this context, data – long-term, comparable, and interoperable – become a sort of actor, shaping and reshaping the social worlds around them” (Ribes and Jackson 2013: 148). Although it is taken from an ecological study of stream chemistry data, this statement applies equally to the problem of automatic recommendation systems.

Even worse, not only the CV but also the footprint one leaves in cyberspace might serve as the basis for decision-making. Xerox used data it had mined from its current and former employees to define the criteria for hiring new staff for its 55,000 call-centre positions. The applicants’ data gained from the screening test were compared with the significant, but sometimes unexpected, criteria detected so far. In the case of Xerox, for example, “employees who are members of one or two social networks were found to stay in their job for longer than those who belonged to four or more social networks”.

Whether the social consequences of these new developments can be attributed to humans alone or also to computers is highly controversial. Luciano Floridi (2013) makes the point that we should differentiate between the accountability of (artificial) agents and the responsibility of (human) agents. Does the algorithm discussed above qualify as an agent? Floridi would argue yes, because “artificial agents could have acted differently had they chosen differently, and they could have chosen differently because they are interactive, informed, autonomous and adaptive” (ibid. 149). So even if “it would be ridiculous to praise or blame an artificial agent for its behavior, or charge it with a moral accusation” (ibid. 150), we must acknowledge that artificial agents, as transition systems, interact with their environment, that they can change their state independently, and that they are even able to adopt new transition rules by which they change their state.

The difference between accountability and responsibility should be kept in mind so that attempts to delegate responsibility to artificial agents can be uncovered. When artificial agents malfunction, the engineers who designed them are called upon to re-engineer them to make sure they no longer cause evil. And in the case of recruitment decisions, companies should be very careful about how they proceed. There is no single recipe for success.

Floridi, Luciano (2013) The Ethics of Information. Oxford: Oxford University Press.

Ribes, David / Jackson, Steven J. (2013) Data Bite Man: The Work of Sustaining a Long-Term Study. In: Lisa Gitelman (ed.), “Raw Data” Is an Oxymoron. Cambridge: The MIT Press. 147-166.

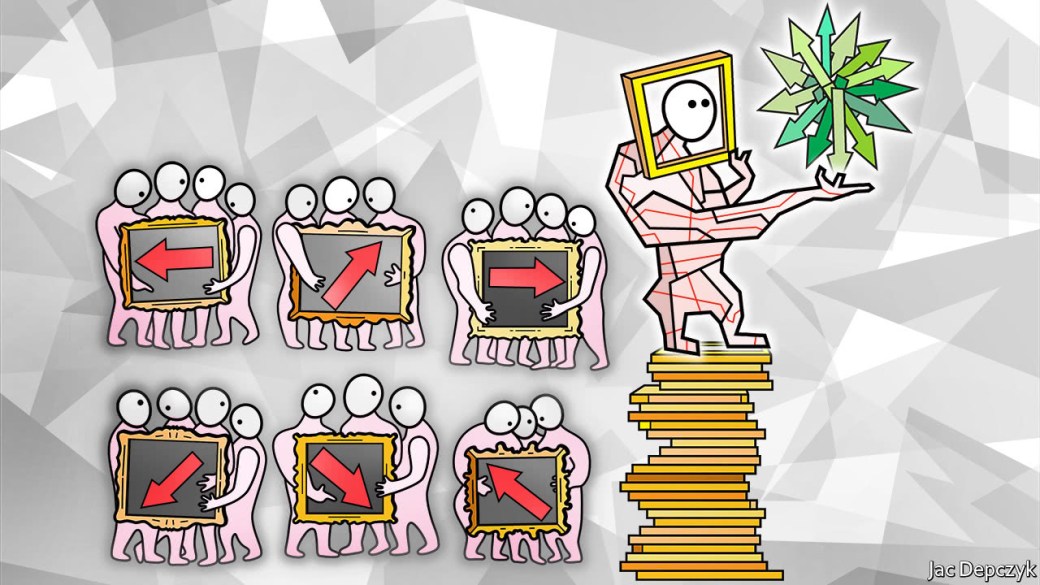

Featured image was taken from: https://cdn.static-economist.com/sites/default/files/images/print-edition/20170610_FND000_0.jpg